Max W.F. Ku

I am a second-year PhD student in Computer Science at the University of Waterloo, Faculty of Mathematics, where I am fortunate to be advised by Wenhu Chen and Yuntian Deng. I work on visual content generation. Previously, I interned at NVIDIA Deep Imagination Research.

At the heart of my work is a simple but ambitious goal:

To make generative visuals fully controllable across science, communication, and creative applications.

While visuals remain my core focus, I am increasingly curious about how they can integrate with physical reasoning and scientific understanding. I believe controllability in generative models should go beyond aesthetics, extending to physical coherence and alignment with how we perceive the world.

My research interests span:

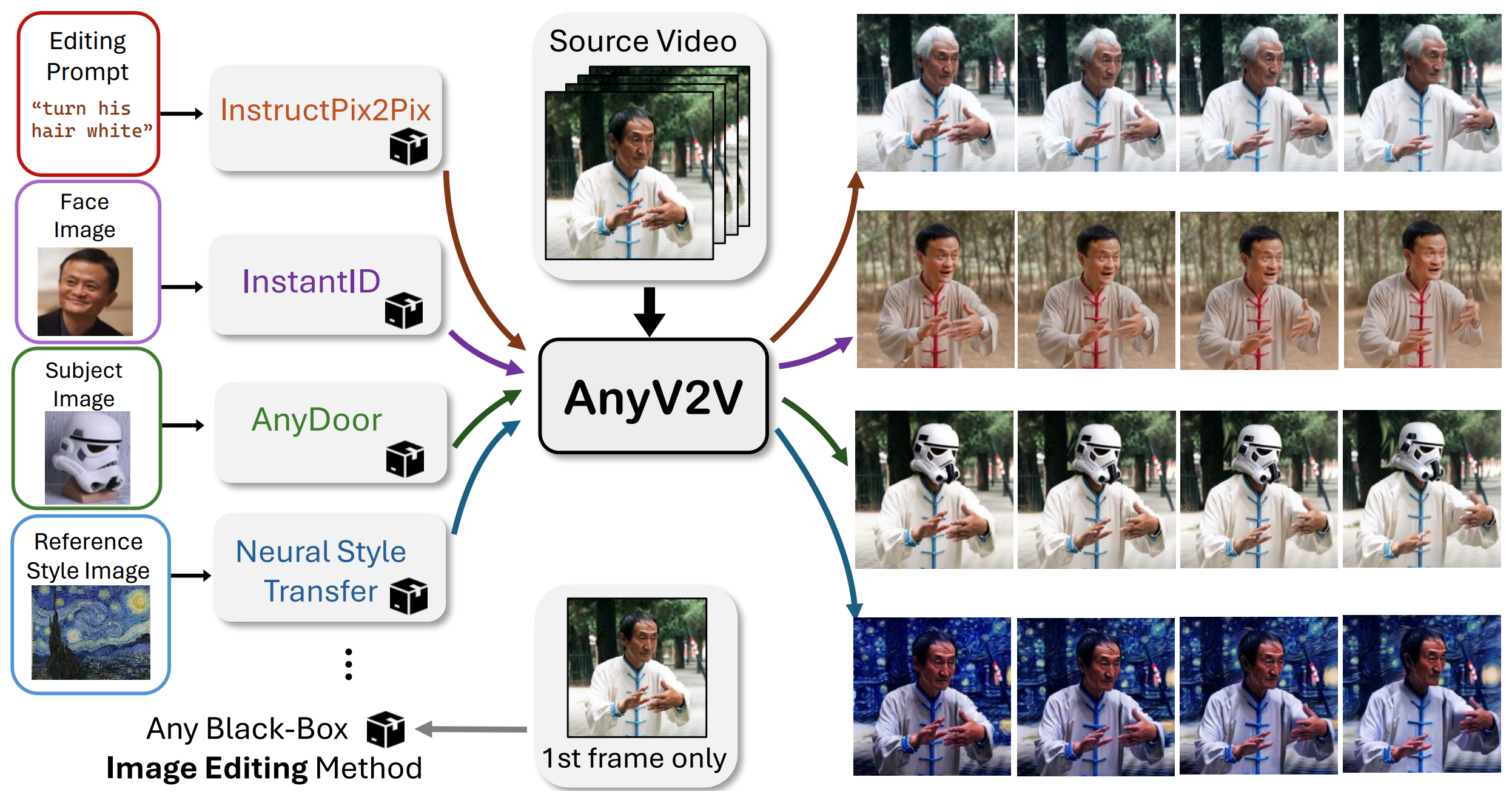

- Controllable Editing and Generation (I prioritize editing over generation)

- World Models (Games, Agents, Physics, …)

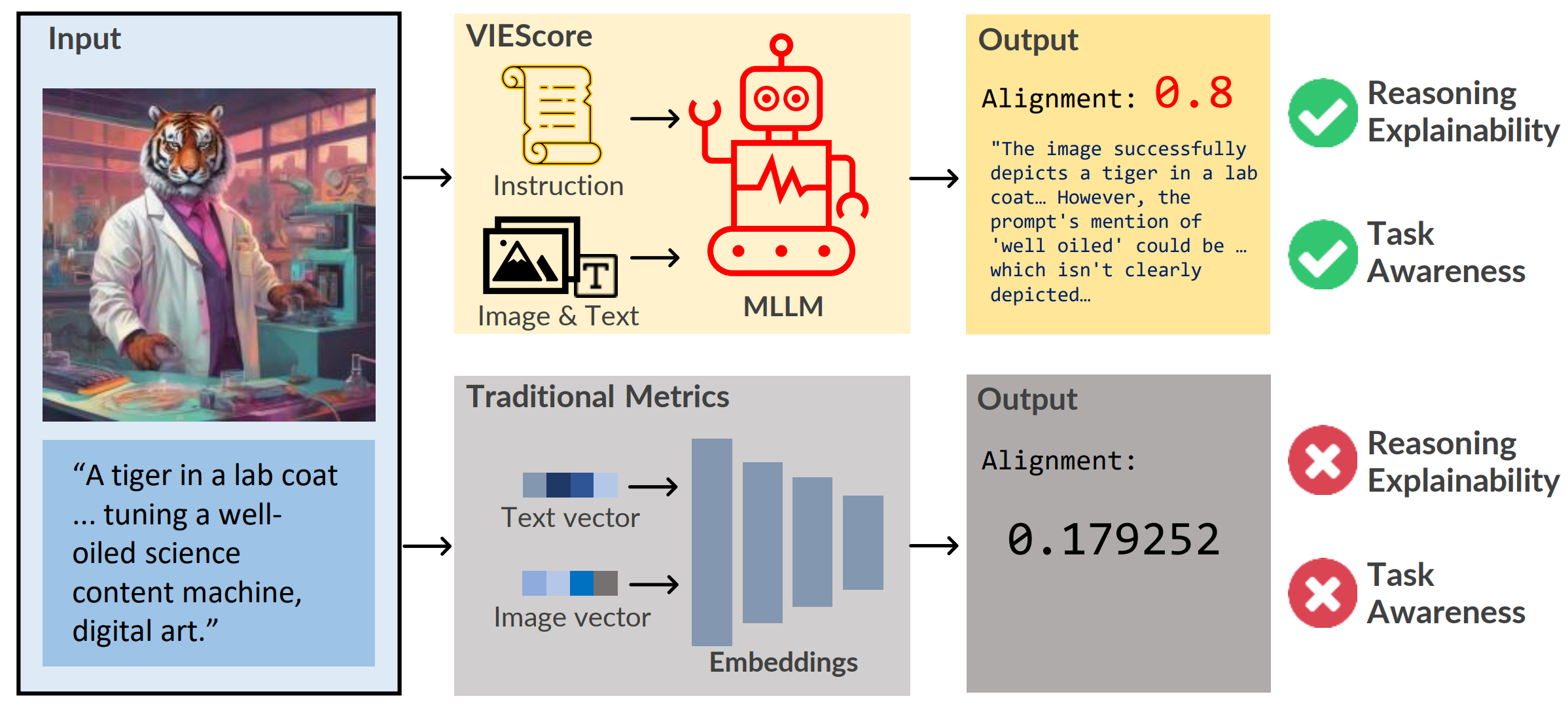

- Interpretability and Explainable AI

- Creative Applications in Entertainment, Education, and Science

Professional Activities

- Reviewed for: ICLR, NeurIPS, ICML, SIGGRAPH Asia, SIGGRAPH, TVCG, ACL, EMNLP, TMLR

Community

- I lead GGG, a community-driven group dedicated to sharing and discussing papers on Generative AI.

- I host 1:1 Online Coffee Chats to share advice with students from underrepresented backgrounds.

Misc

- Vines’ Log, where I keep my reading notes and various logs.

- I was a member of the HK PolyU Robotics Team during ABU Robocon 2019–2021. Here is a playlist.

- I used to compete in the official gaming tournament UGC League Team Fortress 2 Highlander.

- “Wing Fung” (with the space) is my first name and “Ku” is my last name. “Max” is my commonly used “English name”, which is not part of my legal name; this practice is common in Hong Kong.

- Project Moon Fans: We are not cavemen, we have technology.

news

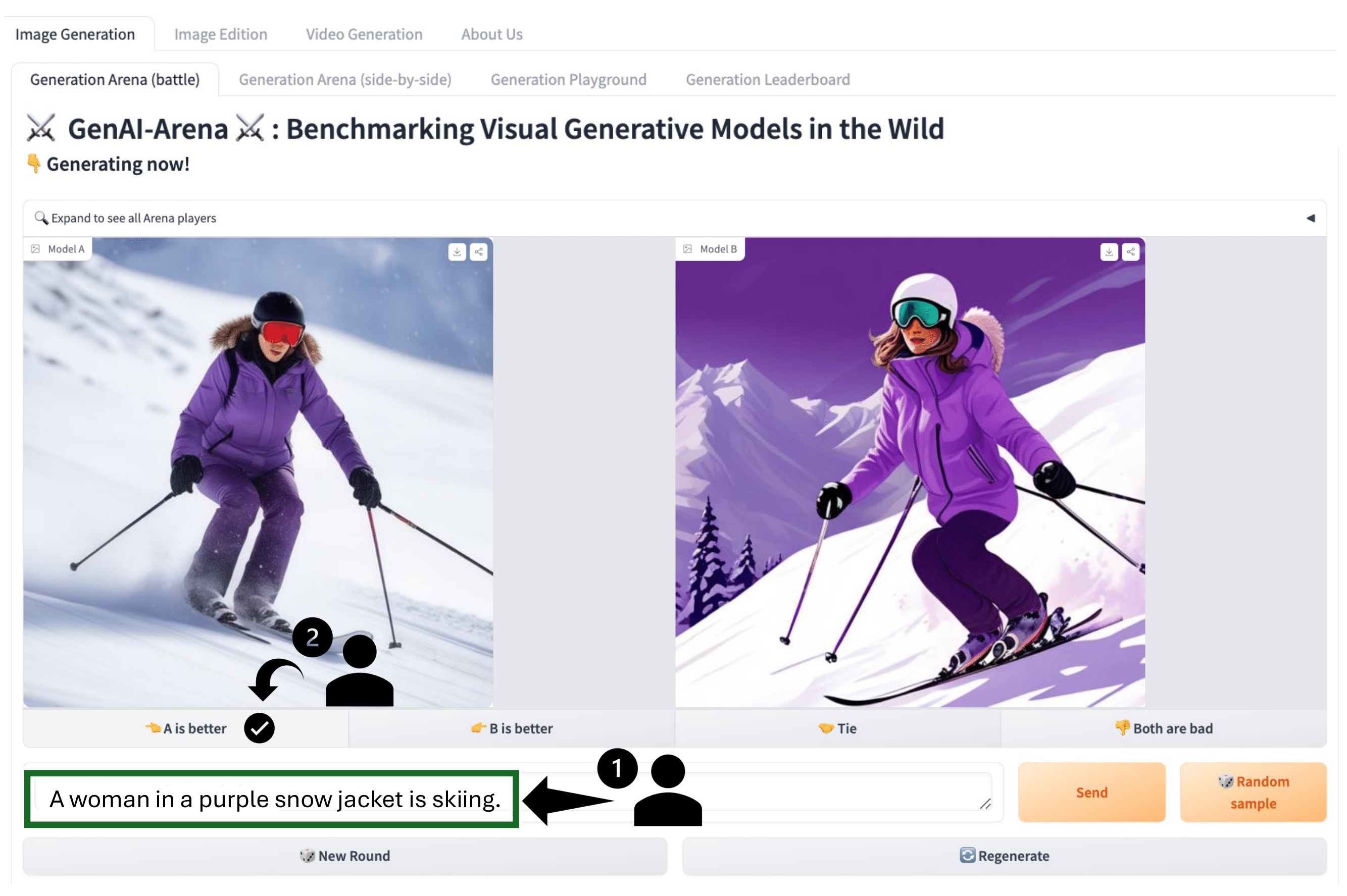

| Jan 25, 2026 | ImagenWorld and EditReward Accepted to ICLR 2026. |

|---|---|

| Jun 15, 2025 | Achieved a total of 1000 citations. |

| Jun 11, 2025 | DisProtEdit got accepted to 2025 ICML GenBio workshop and FM4LS workshop. |

| Jun 02, 2025 | Joined NVIDIA Deep Imagination Research as an intern for Summer 2025. |

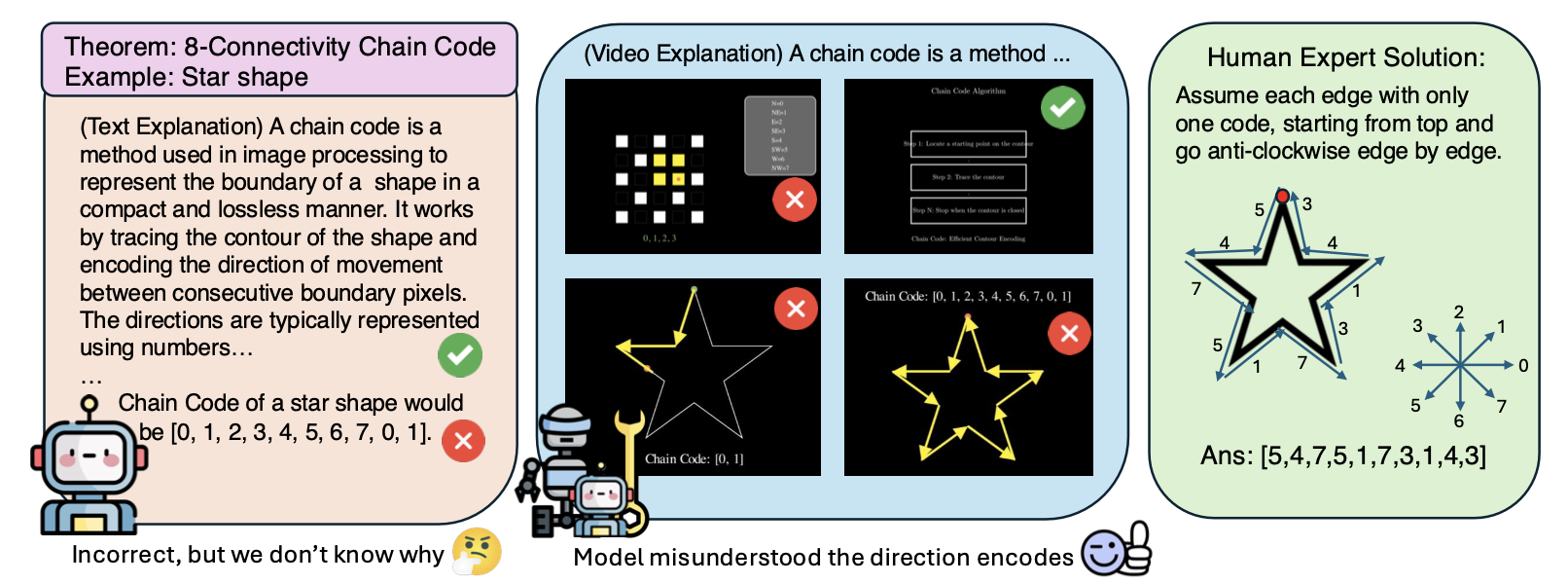

| May 15, 2025 | TheoremExplainAgent got accepted to ACL 2025 Main (Oral)! |